We are pleased to release new results on the approximation of deep Convolutional Neural Networks (CNNs). We used Ristretto to approximate trained 32-bit floating point CNNs. The key results can be summarized as follows:

- 8-bit dynamic fixed point is enough to approximate three ImageNet networks.

- 32-bit multiplications can be replaced by bit-shifts for small networks.

Traditionally, CNNs are trained in 32-bit or 64-bit floating point. Deep CNNs are resource-intensive in terms of both computation and memory. To allow energy-efficient inference of these networks on mobile devices, it is desirable to condense the models. In our experiments, we approximate the network weights and activations with far fewer than 32 bits. Moreover, we analyze the impact of representing weights and activations in different number formats.

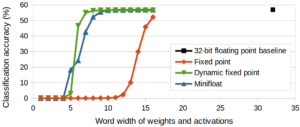

Approximation of AlexNet

The figure above shows the effect of quantizing AlexNet to different word widths, using different number formats. Dynamic fixed point proves to be the best approximation strategy at 8 bits.

We approximated three ImageNet networks with dynamic fixed point: AlexNet, GoogLeNet and SqueezeNet. We started with 32-bit floating point models from the Caffe Model Zoo and approximated them with 8-bit dynamic fixed point, at an absolute accuracy loss below 1% (see table below).

| CNN | 32-bit floating point | 8-bit dynamic fixed point |
|---|---|---|
| AlexNet | 56.90% | 56.14% |
| GoogLeNet | 68.93% | 68.37% |
| SqueezeNet | 57.68% | 57.21% |
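To illustrate the idea behind dynamic fixed point, here is a minimal sketch in plain Python. It is not Ristretto's actual implementation. The function names and the rule for choosing the fractional length are assumptions made for illustration. The sketch assumes that each group of values (for example, the weights of one layer) gets its own fractional length, chosen so that the largest magnitude in the group still fits in the 8-bit word.

```python
import math


def choose_fractional_length(values, bit_width=8):
    """Pick a fractional length so the largest magnitude fits in the word.

    Assumption for this sketch: one sign bit plus enough integer bits
    to hold the largest absolute value; the remaining bits are fractional.
    """
    max_abs = max(abs(v) for v in values)
    if max_abs == 0:
        return bit_width - 1
    # Magnitude bits needed so that 2**bits > max_abs, plus one sign bit.
    int_len = math.floor(math.log2(max_abs)) + 2
    return bit_width - int_len


def quantize(values, bit_width=8, frac_len=None):
    """Round values to a signed fixed point grid with step 2**-frac_len."""
    if frac_len is None:
        frac_len = choose_fractional_length(values, bit_width)
    scale = 2.0 ** frac_len
    q_min = -(2 ** (bit_width - 1))
    q_max = 2 ** (bit_width - 1) - 1
    quantized = []
    for v in values:
        q = round(v * scale)           # nearest integer code
        q = max(q_min, min(q_max, q))  # saturate to the representable range
        quantized.append(q / scale)    # back to a real value for inspection
    return quantized, frac_len


# Small weights get many fractional bits, large activations get few.
weights_q, weights_fl = quantize([0.5, -0.25, 0.1, 0.9])
activations_q, activations_fl = quantize([3.2, 100.0])
```

In this sketch the small weights end up with 7 fractional bits, while the larger activations end up with none. Letting each group choose its own fractional length in this way is what makes the format "dynamic".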

Moreover, we approximated different networks through multiplier-free arithmetic. For LeNet, no accuracy drop is observed. For AlexNet, the accuracy drop is below 4% (see table below).

| CNN | 32-bit floating point | Multiplier-free arithmetic |
|---|---|---|
| LeNet | 99.15% | 99.15% |
| AlexNet | 56.90% | 53.18% |
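The following sketch shows how a multiplication can turn into a bit shift. It assumes that each weight is rounded to a signed integer power of two and that activations are stored as integers. The function names and the exponent range (`min_exp`, `max_exp`) are hypothetical choices for illustration, not Ristretto's API or settings.

```python
import math


def to_power_of_two(weight, min_exp=-8, max_exp=-1):
    """Approximate a weight as sign * 2**exp and return (sign, exp).

    The exponent range is an illustrative assumption. A zero weight is
    returned with sign 0.
    """
    if weight == 0:
        return 0, min_exp
    sign = 1 if weight > 0 else -1
    exp = round(math.log2(abs(weight)))    # nearest exponent in the log domain
    exp = max(min_exp, min(max_exp, exp))  # clip to the allowed range
    return sign, exp


def shift_multiply(activation, sign, exp):
    """Compute activation * (sign * 2**exp) using shifts and negation only.

    `activation` is an integer (for example, a fixed point code). Right
    shifts in Python round toward negative infinity.
    """
    if sign == 0:
        return 0
    shifted = activation << exp if exp >= 0 else activation >> -exp
    return shifted if sign > 0 else -shifted


# A weight of 0.3 is approximated by 2**-2, so the product becomes a shift.
sign, exp = to_power_of_two(0.3)
product = shift_multiply(64, sign, exp)
```

Here the weight 0.3 is replaced by 0.25, so multiplying the activation 64 by it reduces to shifting right by two bits. Coarse weight rounding of this kind is one plausible source of the accuracy loss on larger networks.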

We submitted a paper with more details to the IEEE Transactions on Neural Networks and Learning Systems. The paper builds on our previous ICLR extended abstract. The code of Ristretto is available on GitHub. For questions on Ristretto, please post on our Google group.